tldr: This post demonstrates that GANs can break perceptual image hash algorithms in two key ways: (1) Reversal Attack: synthesizing the original image from its hash; (2) Poisoning Attack: synthesizing hash collisions for arbitrary natural image distributions.

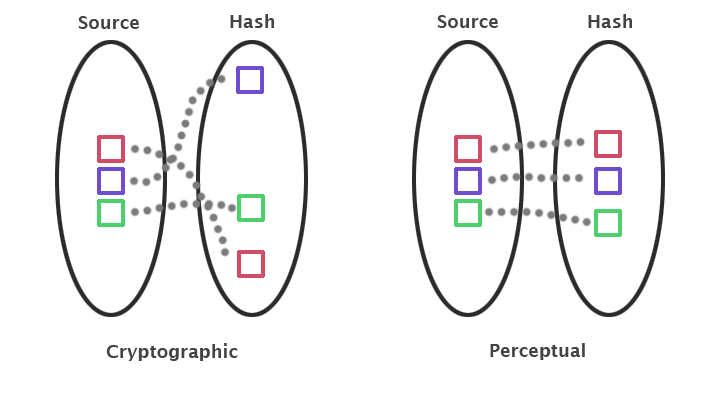

Diagram illustrating the difference in hash space properties. Image credit: Joe Bertolami


Perceptual Image Hash Background

A perceptual image hash (PIH) is a short hexadecimal string (e.g. ‘00081c3c3c181818’) derived from an image’s appearance. Despite the name, perceptual image hashes are not cryptographically secure hashes. This is by design: PIHs aim to change little, if at all, under small changes to the image (rotation, crop, gamma correction, noise addition, adding a border). Cryptographic hash functions, in contrast, are designed so that changing any single input bit changes the output completely.
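To make the contrast concrete, here is a minimal pure-Python sketch of an average hash in the spirit of imagehash's `average_hash` (the a_hash used in this post). It assumes the image has already been converted to grayscale and downscaled to 8x8 (the real library performs that resize itself); each bit records whether a pixel is brighter than the mean. The toy image and the one-pixel edit are illustrative only, not the images in the figure below.

```python
import hashlib


def average_hash_bits(pixels):
    """Return one bit per pixel: 1 if brighter than the mean, else 0.

    Assumes `pixels` is an 8x8 grid of grayscale values (0-255) that has
    already been downscaled; imagehash performs that resize step itself.
    """
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    return [1 if p > mean else 0 for p in flat]


def bits_to_hex(bits):
    """Pack bits row-major, most significant bit first, into a hex string."""
    value = int("".join(str(b) for b in bits), 2)
    return format(value, "0{}x".format(len(bits) // 4))


def hamming(a, b):
    """Number of positions where two bit lists differ."""
    return sum(x != y for x, y in zip(a, b))


# Toy 8x8 image: a bright square on a dark background.
original = [[200 if 2 <= r <= 5 and 2 <= c <= 5 else 20 for c in range(8)]
            for r in range(8)]
# A tiny edit: brighten a single background pixel slightly.
modified = [row[:] for row in original]
modified[0][0] = 25

h_orig = average_hash_bits(original)
h_mod = average_hash_bits(modified)
print(bits_to_hex(h_orig))     # 00003c3c3c3c0000
print(hamming(h_orig, h_mod))  # 0: the perceptual hash is unchanged

# The cryptographic hash of the raw pixel bytes changes completely.
md5_orig = hashlib.md5(bytes(p for row in original for p in row)).hexdigest()
md5_mod = hashlib.md5(bytes(p for row in modified for p in row)).hexdigest()
print(md5_orig == md5_mod)     # False
```

The same smoothness that makes the perceptual hash robust is what lets a model learn to map hashes back to plausible images.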

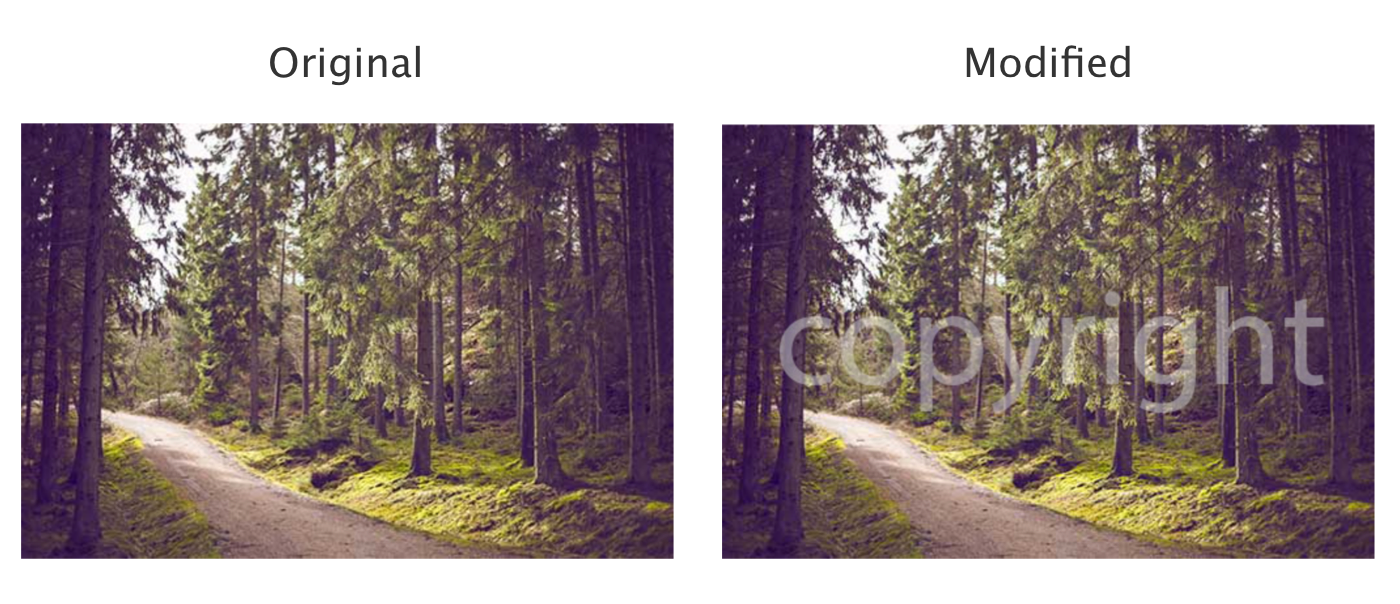

The perceptual hashes of the images below change only slightly after the text modification, while their MD5 hashes are completely different.

Image credit: Jens Segers


a_hash(original) =

a_hash(modified) =

md5(original) = 8d4e3391a3bca7...

md5(modified) =

...

I won’t go into detail about how these algorithms work; see (here) for more info.

Despite not being cryptographically secure, PIHs are still used in a wide range of privacy-sensitive applications.

Hash Reversal Attack

Because perceptual image hashes relate inputs to outputs smoothly, we can model this process and its inverse with a neural network. GANs are well suited to this generation task, especially because many different images can map to the same hash. A GAN learns the manifold of natural images, which lets the model choose among these differing but equally valid outputs.

I train the Pix2Pix network (paper, github) to convert perceptual image hashes, computed using the a_hash function from the widely used Python hashing library imagehash (github), back into images. For this demonstration, I train on the CelebA faces dataset, though the black-box attack is general and applicable to other datasets, image distributions, and hash functions. I arrange the image hash produced by a_hash into a 2D array to serve as the input image to the pix2pix model.
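The post does not spell out the exact layout, so here is one plausible sketch, assuming the 64-bit a_hash is unpacked row-major (the same order as its hex string) into an 8x8 binary grid and then upscaled with nearest-neighbour sampling to 256x256, a common pix2pix input size. The function names and the 0/255 intensity mapping are illustrative choices, not taken from the original code.

```python
def hash_hex_to_grid(hex_str, hash_size=8):
    """Unpack a hex perceptual hash into a hash_size x hash_size grid of 0/1 bits."""
    n_bits = hash_size * hash_size
    bits = bin(int(hex_str, 16))[2:].zfill(n_bits)
    return [[int(bits[r * hash_size + c]) for c in range(hash_size)]
            for r in range(hash_size)]


def upscale_nearest(grid, out_size=256):
    """Nearest-neighbour upscale of a binary grid to an out_size x out_size image.

    Bits become 0 (black) or 255 (white). Assumes out_size is a multiple of
    the grid size so every hash bit covers an equal square block.
    """
    n = len(grid)
    scale = out_size // n
    return [[255 * grid[r // scale][c // scale] for c in range(out_size)]
            for r in range(out_size)]


grid = hash_hex_to_grid("00081c3c3c181818")
for row in grid:
    print("".join("#" if b else "." for b in row))
input_image = upscale_nearest(grid)  # 256x256 conditioning image for pix2pix
```

Each hash bit becomes a 32x32 block, so the generator sees the hash as a coarse black-and-white "image" it can translate like any other pix2pix input.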

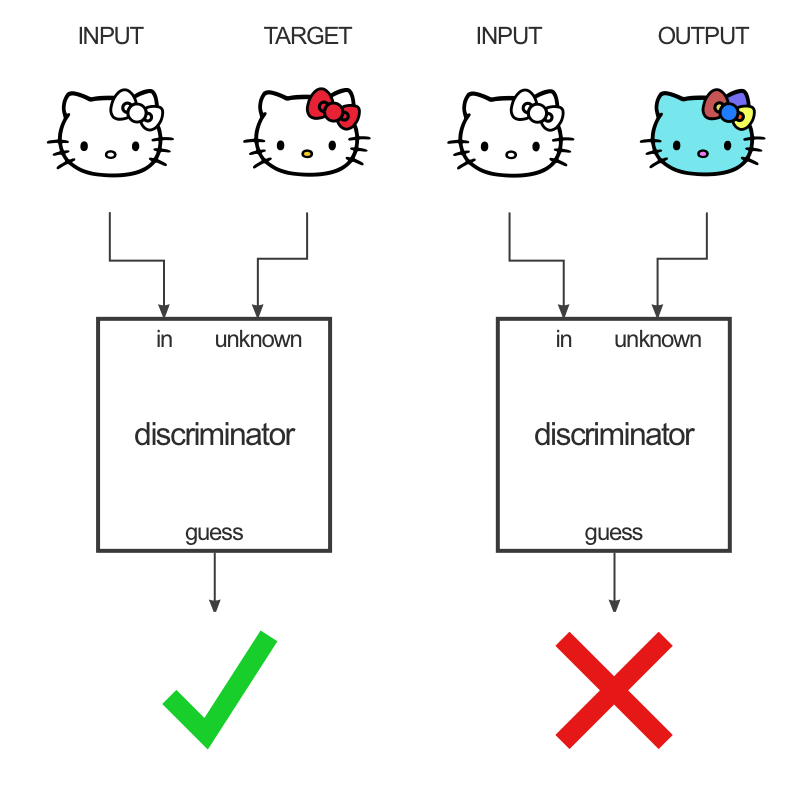

Pix2Pix model setup. Image credit: Christopher Hesse


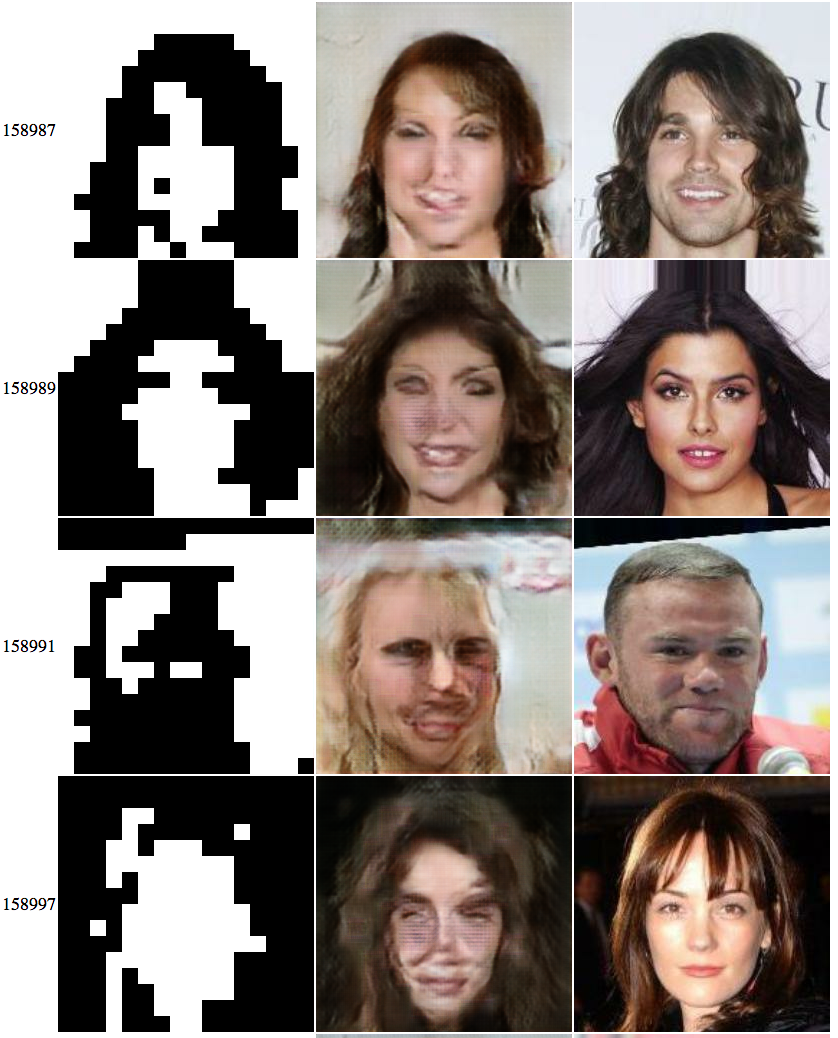

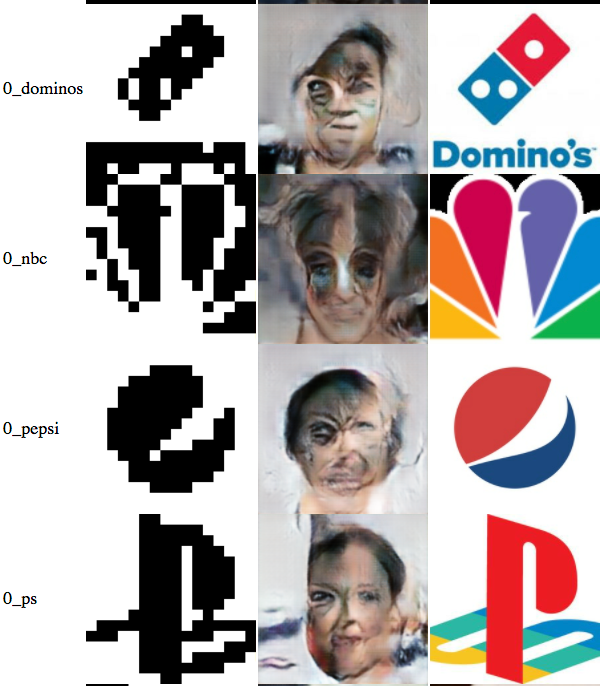

Below are some results of this Hash Reversal Attack. In many cases the attack generates a face that resembles the original, and even in failure cases it often captures the correct gender, hairstyle, and race of the original image. Note: the face textures aren’t perfect because the model has not fully converged; it was trained on limited compute resources using Google Colab.

Left: 2D representation of the hash. Middle: reconstructed image. Right: original image.


Many applications assume these hashes are privacy-preserving, but the results above show that they can be reversed. Any service that claims to protect privacy by storing only perceptual hashes of sensitive images is misleading its users and is potentially vulnerable to an attack like this.

Hash Poisoning Attack

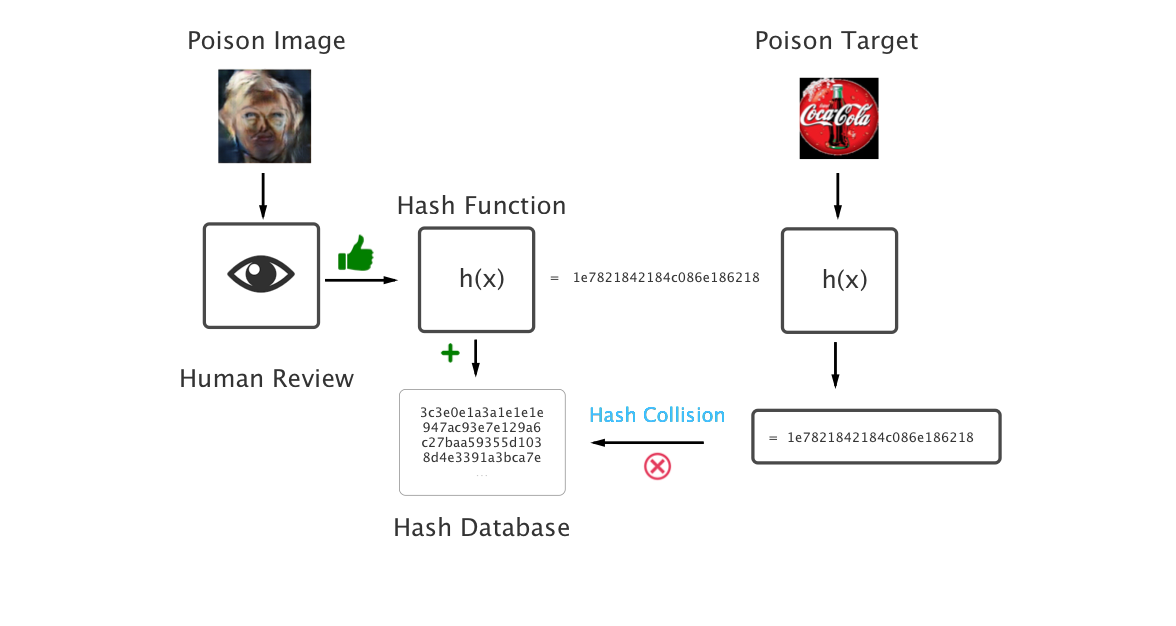

The Hash Poisoning Attack targets the following scenario:

Consider a system that lets users submit photos to a database of banned images. A human reviews each submission to ensure it deserves banning (and that it is not, say, the Coca-Cola logo). If approved, the image’s hash is added to the database and checked against every new upload. If a new image’s hash collides with a banned hash, the upload is blocked.
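A minimal sketch of the matching step in such a system, assuming hashes are stored as 64-bit hex strings and an upload is blocked when its hash matches a banned hash exactly (as described above) or, optionally, within a small Hamming distance. The `BanList` class and its `max_distance` parameter are hypothetical, not taken from any particular platform.

```python
class BanList:
    """Hypothetical store of banned perceptual hashes (64-bit hex strings)."""

    def __init__(self, max_distance=0):
        # max_distance=0 means only exact hash collisions are blocked.
        self.max_distance = max_distance
        self.banned = set()

    def add(self, hash_hex):
        """Called after a human reviewer approves a submitted image."""
        self.banned.add(hash_hex)

    @staticmethod
    def distance(a_hex, b_hex):
        """Hamming distance between two hex-encoded hashes."""
        return bin(int(a_hex, 16) ^ int(b_hex, 16)).count("1")

    def is_blocked(self, upload_hash_hex):
        """Block the upload if its hash is close enough to any banned hash."""
        return any(self.distance(upload_hash_hex, b) <= self.max_distance
                   for b in self.banned)


bans = BanList()
bans.add("00081c3c3c181818")                # approved by a reviewer
print(bans.is_blocked("00081c3c3c181818"))  # True: exact collision
print(bans.is_blocked("00081c3c3c181819"))  # False: one bit differs
```

The check only ever sees hashes, never the reviewed image itself: any upload whose hash collides with an approved entry is blocked, regardless of what the approved image actually looked like.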

If a malicious user could somehow trick the human reviewer into accepting the Coca-Cola logo as a banned image, the database would be ‘poisoned’ with hashes of images that should be shareable. In fact, the attacker does not need the reviewer to accept the logo itself, only an innocuous-looking image whose hash collides with the Coca-Cola logo’s hash! Our learned generative model can produce exactly such a human-fooling image.

Hash-Cycle Loss for Stronger Guarantees of Hash Collision

With the model described so far, we can reverse hashes into approximations of their original images. However, these generated images do not always hash back to exactly the original hash. To apply this attack successfully, we have to modify the pix2pix objective slightly so that the generated image hashes back to the true original hash.
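For reference, pix2pix combines a conditional GAN loss with an L1 reconstruction term. One way to write the modified objective, assuming the hash-cycle term is simply added with its own weight (a hypothetical $\mu$) and that the hard hash is replaced by a differentiable approximation $\tilde h$ (the post does not say how this is done), is shown below. Here $x$ is the input hash arranged as a 2D array, $x_i \in \{0, 1\}$ is its $i$-th bit, $n$ is the number of hash bits (64 for the default 8x8 a_hash), $y$ is the original image, $z$ is the noise input, and $\lambda$ is the pix2pix L1 weight.

$$
\begin{aligned}
\mathcal{L}_{cGAN}(G, D) &= \mathbb{E}_{x,y}\left[\log D(x, y)\right] + \mathbb{E}_{x,z}\left[\log\left(1 - D(x, G(x, z))\right)\right] \\
\mathcal{L}_{L1}(G) &= \mathbb{E}_{x,y,z}\left[\lVert y - G(x, z) \rVert_1\right] \\
\mathcal{L}_{hash}(G) &= \mathbb{E}_{x,z}\left[-\frac{1}{n}\sum_{i=1}^{n}\Big(x_i \log \tilde h_i(G(x, z)) + (1 - x_i)\log\big(1 - \tilde h_i(G(x, z))\big)\Big)\right] \\
G^* &= \arg\min_G \max_D \; \mathcal{L}_{cGAN}(G, D) + \lambda\,\mathcal{L}_{L1}(G) + \mu\,\mathcal{L}_{hash}(G)
\end{aligned}
$$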

I add a hash-cycle loss term to the standard pix2pix loss. This term computes the hash of the generated image and applies a pixel-wise (per-bit) cross-entropy loss between the true hash and the generated image’s hash. In limited experiments, this additional loss term raises the generated hash collision rate from roughly 30% to roughly 80%.
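The post does not say how the hash is made differentiable, and a_hash’s hard threshold has zero gradient almost everywhere. Below is a minimal forward-pass sketch under one common assumption: replace the resize with 8x8 block averaging and the hard “brighter than the mean” test with a temperature-scaled sigmoid, then apply binary cross-entropy against the true hash bits. The `temperature` value and all function names are hypothetical; in real training this would be written in the GAN’s framework so gradients reach the generator. The collision rate (the metric quoted above) is computed here as the fraction of exact hard-hash matches.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def block_mean(img, hash_size=8):
    """Average-pool a grayscale image (list of rows) to hash_size x hash_size.

    Stand-in for imagehash's resize; assumes both image dimensions are
    multiples of hash_size.
    """
    bh, bw = len(img) // hash_size, len(img[0]) // hash_size
    out = []
    for r in range(hash_size):
        row = []
        for c in range(hash_size):
            block = [img[y][x]
                     for y in range(r * bh, (r + 1) * bh)
                     for x in range(c * bw, (c + 1) * bw)]
            row.append(sum(block) / len(block))
        out.append(row)
    return out


def soft_average_hash(img, hash_size=8, temperature=0.05):
    """Differentiable relaxation of a_hash: sigmoid((pixel - mean) / T) per bit."""
    small = [p for row in block_mean(img, hash_size) for p in row]
    mean = sum(small) / len(small)
    return [sigmoid((p - mean) / temperature) for p in small]


def hard_hash(img, hash_size=8):
    """Hard a_hash-style bits, used to check for an exact collision."""
    small = [p for row in block_mean(img, hash_size) for p in row]
    mean = sum(small) / len(small)
    return [1 if p > mean else 0 for p in small]


def hash_cycle_loss(generated, true_bits, eps=1e-7):
    """Mean binary cross-entropy between soft hash bits and the true hash bits."""
    probs = soft_average_hash(generated)
    total = 0.0
    for p, t in zip(probs, true_bits):
        p = min(max(p, eps), 1.0 - eps)  # keep log() finite
        total += -(t * math.log(p) + (1 - t) * math.log(1.0 - p))
    return total / len(true_bits)


def collision_rate(generated_hashes, target_hashes):
    """Fraction of generated images whose hard hash exactly equals the target."""
    hits = sum(g == t for g, t in zip(generated_hashes, target_hashes))
    return hits / len(target_hashes)


# Toy 64x64 "generated" image with pixel values in [0, 1].
generated = [[1.0 if 16 <= y < 48 and 16 <= x < 48 else 0.0 for x in range(64)]
             for y in range(64)]
target = hard_hash(generated)      # treat this as the true hash
flipped = [1 - b for b in target]  # a hash the image clearly does not match

print(hash_cycle_loss(generated, target))   # small
print(hash_cycle_loss(generated, flipped))  # large
print(collision_rate([hard_hash(generated)], [target]))  # 1.0
```

Lowering `temperature` makes the soft hash closer to the real one but its gradients sharper; that trade-off is a tuning choice, not something the post specifies.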

Hash Collision Attack Model

Above is a diagram illustrating our network generating an image of a face whose hash collides with that of the Coca-Cola logo. Because the two images share the same hash, a user could submit the face to poison a hash database and prevent the corporate logo from being uploaded to a platform. Here are some more corporate logos paired with generated faces whose hashes collide with them.

Suggestions

Don’t use perceptual image hashing in privacy- or content-sensitive applications! Or at least, don’t store the hashes without additional security measures.

A more secure scheme is to generate many potential hashes for an image (by applying image transformations to create new hashes), pass those hashes through a cryptographic hash function such as SHA-256 (MD5, though widely used, is no longer considered secure), and store these hashed hashes in your database to check against when matching future images.
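A minimal sketch of that scheme, assuming a pure-Python average hash on pre-downscaled 8x8 grayscale grids and a hypothetical transformation set of flips; SHA-256 from Python’s `hashlib` plays the role of the cryptographic hash. All function names are illustrative.

```python
import hashlib


def average_hash_hex(pixels):
    """a_hash-style hex string for a pre-downscaled grayscale grid (assumption)."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = "".join("1" if p > mean else "0" for p in flat)
    return format(int(bits, 2), "0{}x".format(len(bits) // 4))


def variants(pixels):
    """Hypothetical transformation set: identity, horizontal flip, vertical flip."""
    yield pixels
    yield [row[::-1] for row in pixels]
    yield pixels[::-1]


def sealed(hash_hex):
    """Pass a perceptual hash through SHA-256 before storing or comparing it."""
    return hashlib.sha256(hash_hex.encode("ascii")).hexdigest()


def protected_digests(pixels):
    """Digests to store for one banned image: one per transformed variant."""
    return {sealed(average_hash_hex(v)) for v in variants(pixels)}


def is_banned(pixels, stored):
    """Exact-match lookup of an upload against the stored digests."""
    return sealed(average_hash_hex(pixels)) in stored


# Banned image: bright 3x3 block in the top-left corner of an 8x8 grid.
banned = [[200 if r < 3 and c < 3 else 10 for c in range(8)] for r in range(8)]
stored = protected_digests(banned)

mirrored = [row[::-1] for row in banned]
centered = [[200 if 2 <= r <= 5 and 2 <= c <= 5 else 10 for c in range(8)]
            for r in range(8)]
print(is_banned(mirrored, stored))  # True: a stored variant matches
print(is_banned(centered, stored))  # False: different image
```

The trade-off is that the database can only answer exact-match queries: robustness has to come from the set of stored variants rather than from comparing Hamming distances between raw perceptual hashes.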

Another potential mitigation is not to disclose which hash function is being used. But this is security by obscurity: it does not prevent someone with access to the database or codebase from reversing the stored hashes or poisoning the hash pool with the attacks described here.

Notes

Thanks to Duncan Wilson, Ishaan Gulrajani, Karthik Narasimhan, Harini Suresh, and Eduardo DeLeon for their feedback and suggestions on this work. Thanks to Christopher Hesse for the great pix2pix repository, and to Jens Segers for the imagehash Python library.

Liked this post?

If you enjoyed this article, please let me know with a clap or a share on Medium.